REU Project on Wheelchair Detection

The Research Experience for Undergraduates (REU) program is exactly what the name implies. To find out more, go to the UCF Computer Vision REU Home Page. To compare these results with my earlier results, go to my old REU page.

| Name | Ashish Myles |
|---|---|
| Project | Wheelchair Detection and Tracking in 3D in a Calibrated Environment |
| Secret | The wheelchair is modeled in 3D by two parallel circles and a region vertically above them representing the face. New areas in the image are extracted using background subtraction, and a simplistic shadow removal algorithm is used so that the bottom of the wheels can be found more accurately and the wheels located in 3D using floor calibration. The projection of the wheels in the image is extracted using an efficient variation of the Hough Transform, while the face region is detected using skin detection. The ellipses are then pre-processed to normalize them and simplify the 3D math, and their world coordinates are derived via the calibration of the floor. Ellipses and skin regions are pieced together to form the wheelchair model, which is tracked from frame to frame. In cases where the elliptical projection of the wheel is not visible due to the angle of the wheelchair, optical flow is used as a guide to predict the wheelchair motion. |
| Progress | I have improved the detection part from before. I now use a better background subtraction and shadow removal method, described briefly in Pentland's Pfinder paper. I have also improved the accuracy of the wheel detection by attempting to locate the second wheel in the edge image after locating the first wheel. Additionally, I use the tilt of the ellipse to determine which way the wheel is pointing. Wheelchair tracking is still to be done. |
| Thanks | |

Background Subtraction


The shadow removal results are considerably better than those of the previous method I used. Because the shadow near the ground is removed more accurately, the ellipse also fits the wheel a little better. The shadow areas are shown in gray, while the rest of the foreground area is in black.

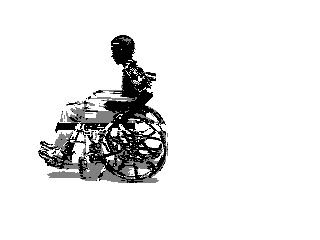
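
To make the idea concrete, here is a minimal sketch of per-pixel background subtraction with a simple shadow test. It is not the code used for the results on this page. It assumes that a shadow pixel is darker than the background but keeps roughly the same brightness-normalized color, which is in the spirit of the Pfinder approach. The function names and all threshold values are hypothetical choices for illustration.

```python
def classify_pixel(pixel, background,
                   diff_thresh=30.0,        # assumed: minimum change to count as "new"
                   chroma_thresh=0.03,      # assumed: max chromaticity change for a shadow
                   min_brightness_ratio=0.4):  # assumed: darkest a shadow may be
    """Label one RGB pixel as 'background', 'shadow', or 'foreground'."""
    # Plain background subtraction: sum of absolute channel differences.
    diff = sum(abs(p - b) for p, b in zip(pixel, background))
    if diff < diff_thresh:
        return "background"

    total_p, total_b = sum(pixel), sum(background)
    if total_p == 0 or total_b == 0:
        # Chromaticity is undefined for pure black; treat as foreground.
        return "foreground"

    # Brightness-normalized color (each channel divided by the channel sum).
    chroma_p = [p / total_p for p in pixel]
    chroma_b = [b / total_b for b in background]
    chroma_dist = max(abs(a - b) for a, b in zip(chroma_p, chroma_b))

    # A shadow is darker than the background but has nearly the same color.
    ratio = total_p / total_b
    if chroma_dist < chroma_thresh and min_brightness_ratio <= ratio < 1.0:
        return "shadow"
    return "foreground"


def classify_image(frame, background, **thresholds):
    """Apply classify_pixel to two images given as nested lists of RGB tuples."""
    return [
        [classify_pixel(p, b, **thresholds) for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]
```

Pixels labeled `shadow` would be the ones drawn in gray, and those labeled `foreground` the ones drawn in black.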

Skin Detection


Nearby skin regions are successfully merged, as seen above. The hand is treated as part of the arm even though the watch separates the two regions. Although the arms are not used in the wheelchair detection algorithm, merging is very important in cases where facial hair splits the face into smaller regions.
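
One way to merge nearby skin regions can be sketched as follows. This is an illustrative assumption rather than the method actually used here: each region is reduced to a bounding box `(x0, y0, x1, y1)`, and any two boxes whose gap is at most a hypothetical `max_gap` pixels are joined with a small union-find structure, so that chains of close regions end up in one group.

```python
def box_gap(a, b):
    """Gap in pixels between two boxes (x0, y0, x1, y1); 0 if they touch or overlap."""
    dx = max(a[0] - b[2], b[0] - a[2], 0)
    dy = max(a[1] - b[3], b[1] - a[3], 0)
    return max(dx, dy)


def merge_close_regions(boxes, max_gap=5):
    """Merge boxes that lie within max_gap pixels of each other (transitively)."""
    parent = list(range(len(boxes)))

    def find(i):
        # Follow parent links to the group representative, compressing the path.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Join every pair of boxes that are close enough.
    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if box_gap(boxes[i], boxes[j]) <= max_gap:
                parent[find(i)] = find(j)

    # Each group becomes the bounding box of all its members.
    groups = {}
    for i, box in enumerate(boxes):
        root = find(i)
        if root not in groups:
            groups[root] = box
        else:
            g = groups[root]
            groups[root] = (min(g[0], box[0]), min(g[1], box[1]),
                            max(g[2], box[2]), max(g[3], box[3]))
    return sorted(groups.values())
```

For example, an arm box and a hand box separated by a four-pixel "watch" gap are merged into one region, while a distant face box stays separate.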

Ellipse Detection


The detected ellipses turn out to be a little smaller than the outer periphery of the wheel. This is acceptable because the next step expands them to reach the floor, so that the wheel can be located in 3D via floor calibration.

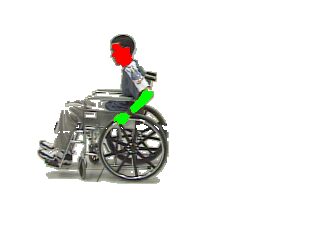

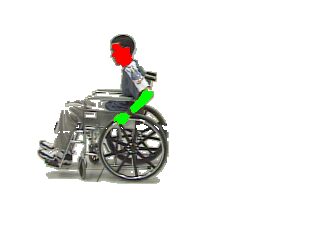
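
To illustrate how a point on the floor can be located in 3D from a single calibrated view, here is a minimal sketch that back-projects an image pixel onto the floor plane. It is a stand-in for the floor calibration used in this project, whose details are not given here. It assumes an idealized pinhole camera at a known height above the floor, pitched down by a known angle, with the origin on the floor directly below the camera. The function name and all parameters (`focal_px`, `cx`, `cy`, `cam_height`, `pitch_down_rad`) are hypothetical.

```python
import math


def pixel_to_floor(u, v, focal_px, cx, cy, cam_height, pitch_down_rad=0.0):
    """Intersect the viewing ray through pixel (u, v) with the floor plane y = 0.

    Returns (x, z) on the floor in the same units as cam_height,
    or None if the ray does not hit the floor in front of the camera.
    """
    # Ray direction in camera coordinates (x right, y up, z forward).
    dx_c = (u - cx) / focal_px
    dy_c = -(v - cy) / focal_px  # image rows grow downward

    # Rotate into world coordinates for a camera pitched down about its x-axis.
    sin_t, cos_t = math.sin(pitch_down_rad), math.cos(pitch_down_rad)
    dx = dx_c
    dy = dy_c * cos_t - sin_t
    dz = dy_c * sin_t + cos_t

    if dy >= 0:
        return None  # ray points at or above the horizon

    # The camera sits at (0, cam_height, 0); solve cam_height + s * dy = 0.
    s = -cam_height / dy
    return (s * dx, s * dz)
```

Under these assumptions, the bottom point of an ellipse gives the wheel's contact point on the floor, and the wheel center lies one radius directly above it.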

Wheelchair Detection


The first and second lines refer to the closer and the farther wheel, respectively. Each gives the 3D coordinates of the center of the wheel, followed by the radius and the angle of orientation with respect to the wall behind. The third line gives the 3D coordinates of the centroid of the face. All values are in feet and are measured with respect to the bottom of the camera stand, and the 3D coordinate system is parallel to the floor. The first and last horizontal lines on the floor are eight and sixteen feet, respectively, from the 3D origin. The vertical line on the floor is approximately where the z-axis of the coordinate system lies. The actual radius of the wheel is one foot. It is interesting to note that the y-coordinates of the wheel centers are approximately equal to the radii of the wheels. They are not exactly equal because they are calculated in two completely different ways.
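
The agreement between the height of a wheel center and its radius can be used as a simple sanity check, since a wheel resting on the floor has its center one radius above it. The sketch below is only an illustration: the `Wheel` class, the tolerance, and the sample numbers are hypothetical and are not taken from the results above.

```python
from dataclasses import dataclass


@dataclass
class Wheel:
    """One detected wheel; all lengths in feet, angle in degrees."""
    x: float
    y: float          # height of the wheel center above the floor
    z: float
    radius: float
    angle_deg: float  # orientation with respect to the wall behind


def height_radius_error(wheel):
    """Difference between the center height and the independently estimated radius."""
    return abs(wheel.y - wheel.radius)


def is_consistent(wheel, tol=0.15):
    """True if the center height and radius agree within tol feet (assumed tolerance)."""
    return height_radius_error(wheel) <= tol


# Made-up values for illustration only.
near_wheel = Wheel(x=1.2, y=0.95, z=10.4, radius=1.02, angle_deg=30.0)
print(is_consistent(near_wheel))
```

A detection that fails this check could be flagged for re-estimation before it is used in the wheelchair model.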